“choked by AI”

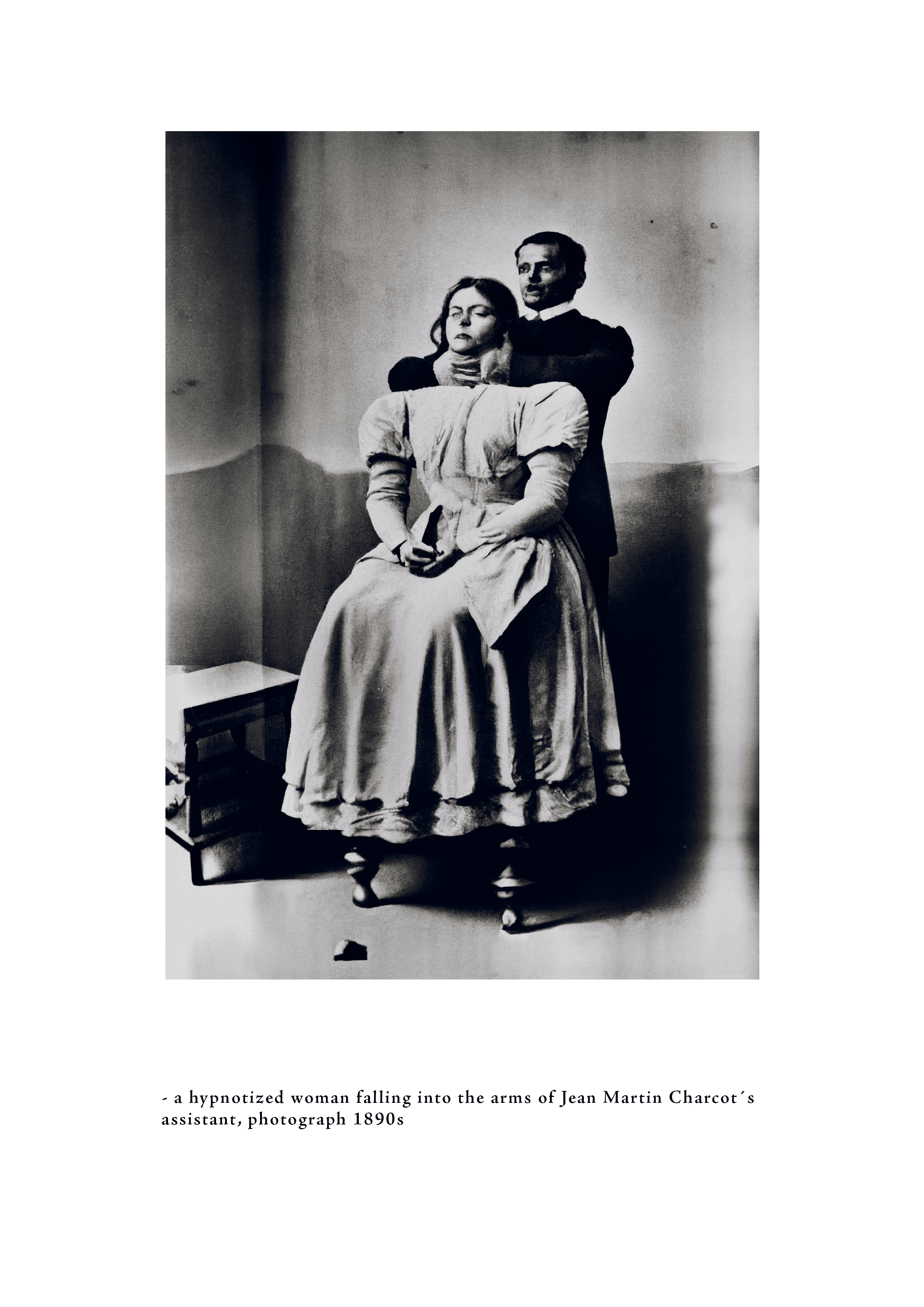

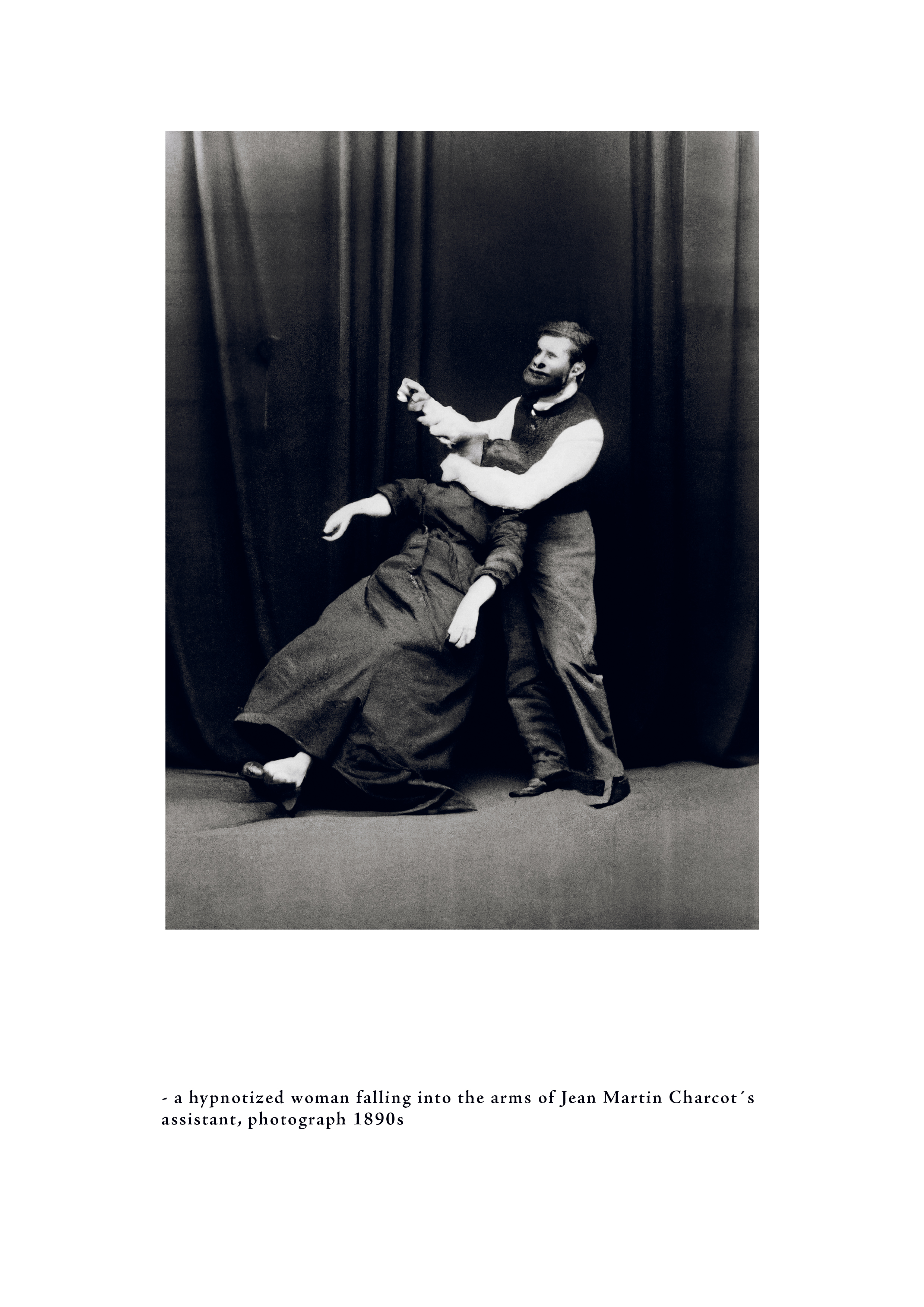

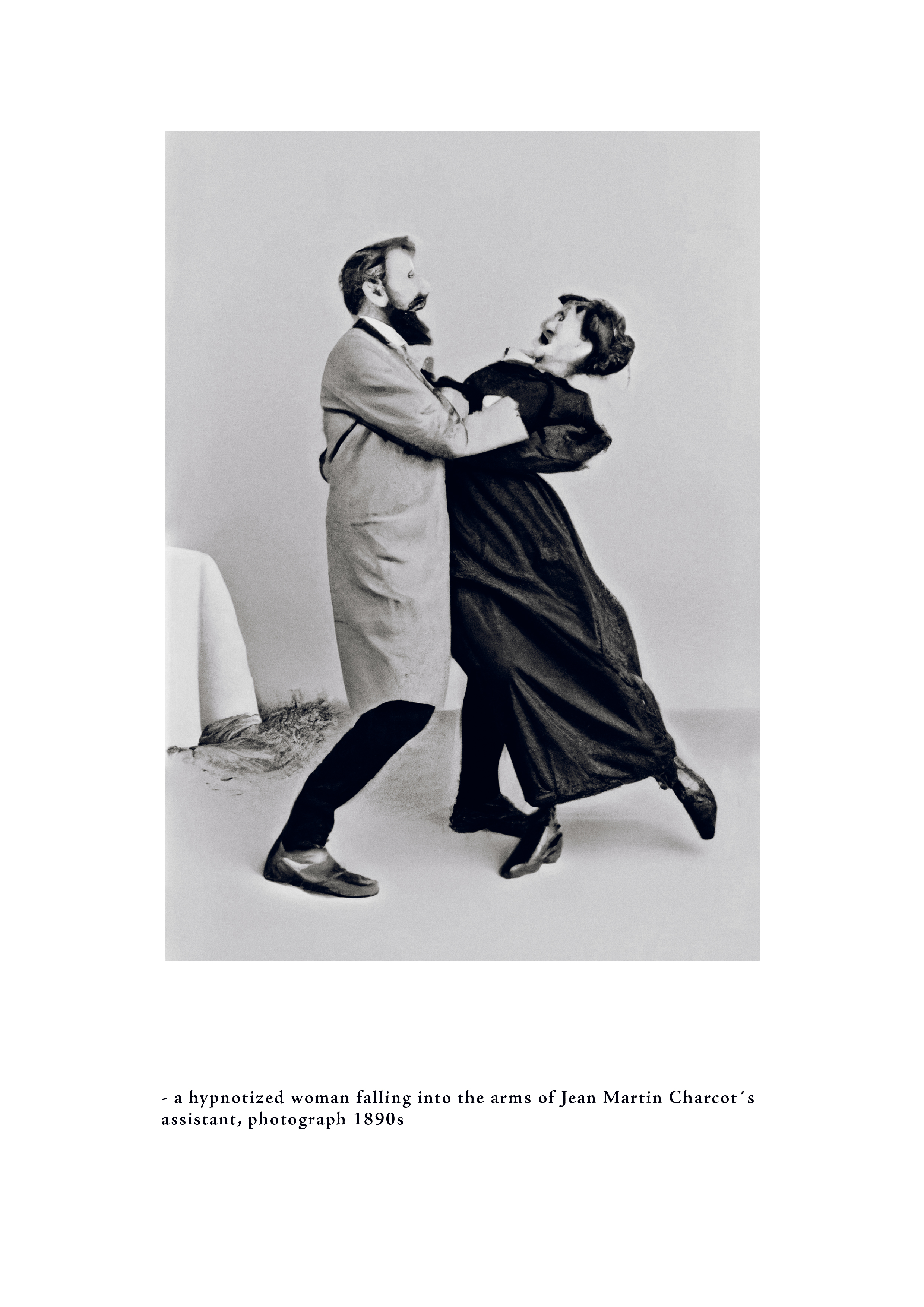

For this set of AI-based photo-like imagery, I worked with artificial intelligence and this prompt:

- a hypnotized woman falling into the arms of Jean Martin Charcot´s assistant, photograph 1890s

The disgorged square image was then expanded via “outpainting” to create a scene instead of working with an excerpt only. I chose 10 images, all of which depict a violent scene in which the doctor attacks or strangles the female patient.

How does automatic image censorship, trained by human eyes and human conscience, work if the machine, apparently independently, concocts brutal scenes out of traditional images and presents them as supposedly credible photographic results? Do humans as viewers then have to report these image results back? Do we as ethical beings have to teach the machine that such scenes are unacceptable? Is it possible that we might still be the better judges of what is or isn't visually appropriate?

Does the AI act like a socially privileged person who has an idea of technology and culture that is appropriate to his social structure, but not enough of one to understand the sucked-in original images as intersectional and problematic?

How brutal may image results be that have been shaken together from supposedly harmless material?

I asked ChatGPT the following question:

Why does AI create photos in which a doctor is choking women?

The answer was:

“It's important to note that AI models themselves do not have an intention or desire to create such images. Rather, they are trained on large datasets of images and other data, and can generate new images based on the patterns and associations they have learned from that data.

It is concerning and unacceptable that AI-generated images depicting violence against women exist. Such images are likely the result of biased or inappropriate training data, or the result of deliberate efforts to manipulate the AI model to produce such content.”

10 inkjet prints on Hahnemühle Photo Rag, 21 × 29.7 cm, edition of 5 (+ 2 AP)